The automotive industry appears to be on the verge of one of its greatest evolutionary changes, engendered by autonomous vehicle technologies. These technologies can offer significant benefits such as saving lives, reducing crashes, decreasing congestion, and minimising fuel consumption, as well as taking the driving task away from the unpredictable, unreliable human driver.

The momentum for such technology innovation in the automotive industry comes as nearly 1.3 million people die in road crashes each year, an average of 3,287 deaths a day. The crashes not only cost lives but amount to a cost equivalent to US$518bn globally – a substantial portion of any country’s annual GDP.

Delphi, a supplier of technologies for the passenger car and CV markets, has been working on making the vehicle safer for many years, believing that increasingly advanced collision avoidance technology can predict potential hazards and help make roads safer for drivers.

Megatrends spoke to Mike Thoeny, Director, Electronic Controls Europe at Delphi Electronics & Safety, about the supplier’s recent collision avoidance technology offerings, and what the future will bring for the increasingly safe and connected car.

Cars and trucks that can see

Although there are no plans to mandate autonomous emergency braking (AEB) technology in light vehicles, the fitment of AEB and lane departure warning (LDW) systems will be required in trucks and buses over 3.5t in Europe from Q4 2014, thanks to a European Commission mandate.

“Both technologies not only warn the driver of pending accidents, but are able to instruct the car to avoid a crash if the driver doesn’t notice an oncoming obstacle on time,” said Thoeny, explaining the significance of these technologies.

AEB systems like those offered by Delphi can reduce road accidents by up to 27%, and Thoeny noted that every truck OEM now has to take this technology seriously, and accelerate it within their product development plans. “So far, most OEMs have reacted quite positively to this news, as this technology is becoming increasingly important and accepted.”

Thoeny described such technologies as not only a step towards increased vehicle safety, but a step in the direction of the autonomous car. “Avoidance with braking is coming in now, but in the future, I can predict steering manoeuvres as part of vehicle systems. This may be a little more complicated with heavy duty trucks, but it has the potential to be a viable upcoming technology development.”

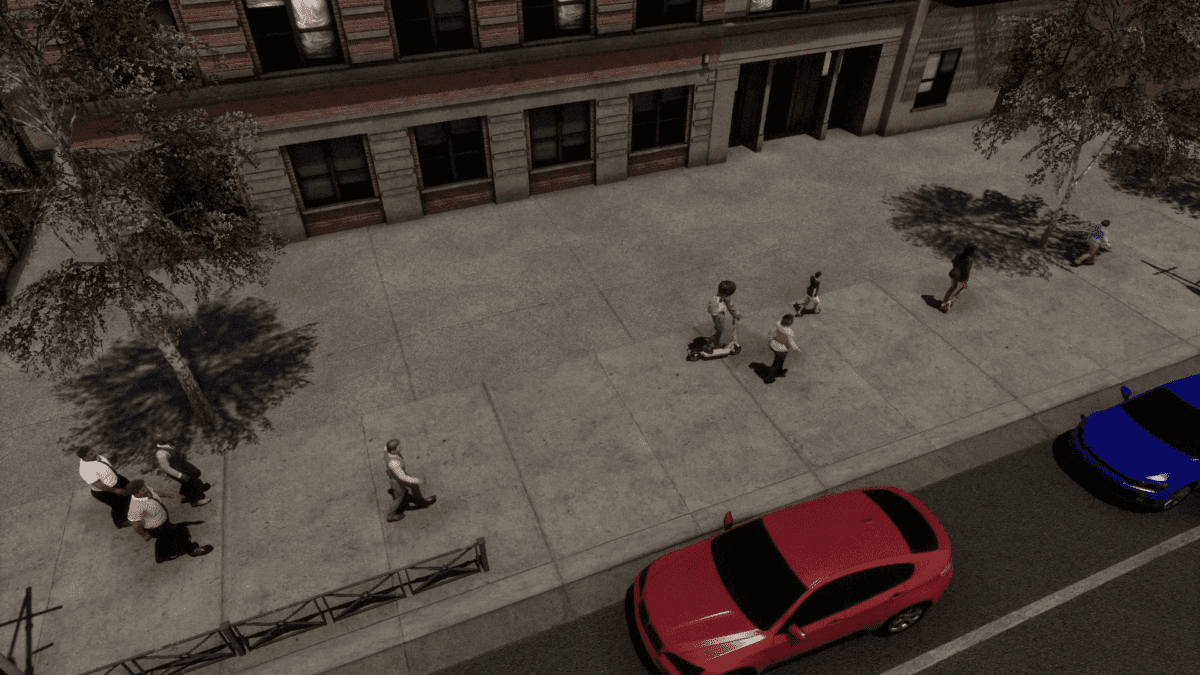

However, he also explained that future technologies, which will sense threats and obstacles around the vehicle, will need not only increased vision, but a fusion of sensing technologies to enable the car to have a 360 degree view of its surroundings.

Sensor fusion

Much of Delphi’s electronic safety development is based on sensing technology that looks at the world outside of the vehicle and senses potential issues and threats to the vehicle. “We concentrate on either warning the driver or doing something to help the driver. We’re moving from safety systems like ABS, which is a great system, to the next step, which is to stop accidents before they even happen.”

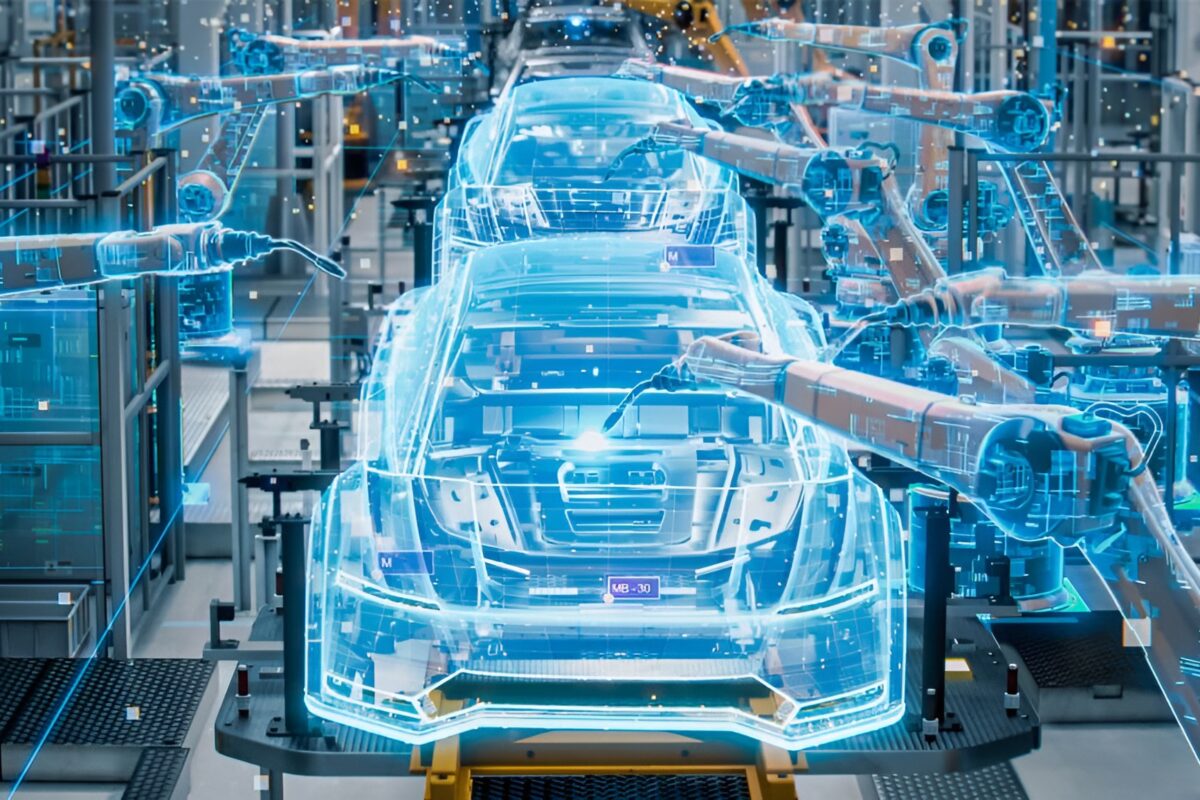

To do this, Delphi has been working on RACam, its integrated Radar and Camera System, which combines radar sensing, vision sensing and data in a single sophisticated module. Thoeny describes this as ‘sensor fusion’.

The technology integration is helping to provide optimum value to vehicle manufacturers by enabling a suite of active safety features that includes adaptive cruise control (ACC), LDW, forward collision warning (FCW), low speed collision mitigation, and AEB for pedestrians and vehicles.

“We use radar technology and vision technology combined,” explained Thoeny. “For AEB, you can use vision, but the best technology from our perspective is radar because of its all-weather performance, and the fact that it can detect objects at further distances with more accuracy. For this reason, we are developing sensor fusion between radar and vision. I think this is where the truck market is heading.”

Getting down to specifics, the fusion technology allows both radar and camera to see the outside world 150-200 metres in front of the truck, and the radar can identify multiple objects. Using vision, it can also tell the driver exactly where the lane markings are and what the upcoming objects are while communicating with the radar. “Is it a truck, car, bridge overhead, pedestrian? It is important to distinguish which object it is as the driver might do something different or react in a different manner. If there is a clear indication of the objects in front, the driver can receive advanced notice of real potential accidents without also obtaining bad information and false alarms. Then, if the driver doesn’t react, the system can automatically apply the brakes.”
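The warn-then-brake sequence Thoeny describes can be illustrated with a minimal Python sketch. It is not Delphi's implementation: the FusedObject type, the decide function, the time-to-collision thresholds and the class labels are all hypothetical, chosen only to show how a radar measurement (range and closing speed) and a camera classification might combine into a single decision.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds for illustration only; not values from Delphi.
WARN_TTC_S = 2.5    # warn the driver below this time-to-collision
BRAKE_TTC_S = 1.2   # brake automatically below this if the driver has not reacted
IGNORED_CLASSES = {"bridge_overhead"}  # camera labels that should never raise an alarm


@dataclass
class FusedObject:
    range_m: float            # distance ahead, as measured by radar
    closing_speed_mps: float  # positive when the gap is shrinking (radar)
    label: str                # camera classification, e.g. "truck", "car", "pedestrian"


def time_to_collision(obj: FusedObject) -> Optional[float]:
    """Seconds until contact at the current closing speed, or None if not closing."""
    if obj.closing_speed_mps <= 0:
        return None
    return obj.range_m / obj.closing_speed_mps


def decide(obj: FusedObject, driver_reacting: bool) -> str:
    """Return "none", "warn" or "brake" for one fused object."""
    # The camera label filters out objects that are not real obstacles,
    # which is how false alarms are avoided in this sketch.
    if obj.label in IGNORED_CLASSES:
        return "none"
    ttc = time_to_collision(obj)
    if ttc is None or ttc > WARN_TTC_S:
        return "none"
    # Brake only when the situation is critical and the driver has not reacted.
    if ttc <= BRAKE_TTC_S and not driver_reacting:
        return "brake"
    return "warn"
```

In this sketch the radar supplies the geometry and the camera supplies the meaning: an overhead bridge at close range is ignored, while a pedestrian at the same range triggers a warning and, if the driver does nothing, braking.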

Leading the way

Even though sensor fusion technologies have already been on the road for passenger vehicles since 2007, Thoeny explained that the demands of the truck market are high and are helping to push the capability of these systems. Truck mandates, in particular, are pushing high performance system development. “Trucks need a longer reaction time because of their weight, for example, so having good systems that can recognise an accident well in advance of the accident itself is a clear need of the sector.”

Delphi is creating the technologies that are giving trucks and light duty vehicles a ‘360 view’, concluded Thoeny. “There is a serious case for being able to see more than just in front or behind, and we have this covered with our technologies.”

Rachel Boagey