On 26 January 2022, the Law Commission and the Scottish Law Commission published their final report making recommendations for the safe and responsible introduction of self-driving vehicles. The report recommends introducing a new Automated Vehicles Act to regulate vehicles that can drive themselves. The proposed Act would do the following:

- draw a clear distinction between features which just assist drivers, such as adaptive cruise control, and those that are self-driving;

- introduce a new system of legal accountability once a vehicle is authorised by a regulatory agency as having self-driving features, and a self-driving feature is engaged, including that the person in the driving seat would no longer be a driver but a ‘user-in-charge’ and responsibility would then rest with the Authorised Self-Driving Entity (ASDE);

- mandate the accessibility of data to understand fault and liability following a collision.

The person in the driving seat of an autonomous vehicle (AV) would no longer be held responsible for accidents and infringements. Instead, under the proposed ‘Automated Vehicles Act’, sanctions would fall on the manufacturer or other body responsible for obtaining authorisation for road use.

The distinction between aids to assist drivers and genuine ‘self-driving’

The report recommends that a clear distinction be made between automated features that merely assist human drivers, for example adaptive cruise control, and those that take over entirely. On that basis there would be no change to the legal landscape where, for example, a driver crashes while using (non-adaptive) cruise control. Cruise control allows the driver to set the car to maintain a set speed without having to keep the accelerator depressed. It can help with fatigue on long journeys. However, it is still the driver’s responsibility to be in control of the vehicle. If a queue of traffic appears on the horizon, it is the driver’s job to deactivate the cruise control (by switching it off or depressing the brake pedal) and bring the car to a safe stop at the end of the traffic queue. If the driver instead ploughs into the back of the queue and causes an accident, the driver is at fault, notwithstanding the use of a driver aid. Having the cruise control on is no different to having a foot on the pedal: the driver remains in control and responsible for keeping watch and stopping if a hazard appears.

Where, however, the car is genuinely ‘self-driving’, the position is radically different. The car is not simply ‘aiding’ the driver, with the driver keeping watch and remaining in control: the car is instead doing the ‘thinking’ and controlling all the key inputs that, before self-driving, were down to the driver. If a car driving itself makes a mistake, such as swerving left to avoid an imagined hazard on the right and thereby crashing into another car on the left when there was in fact no hazard, it would in lay terms seem difficult to point the finger of blame at the driver. The machine made the mistake, not the driver.

The distinction made by the Law Commissions would seem to be a sensible one based in factual reality: the existing legal landscape still applies to human drivers, while a different one may be needed for self-driving machines.

The new system of legal accountability for self-driving cars

When a self-driving feature is engaged, the person in the driving seat would no longer be a driver but would instead be a ‘user-in-charge’, who could not be prosecuted for offences arising directly from the driving task. The user-in-charge would retain other driver duties not associated with the decisions involved in driving along the road, such as carrying insurance, checking loads or ensuring that children wear seat belts. Responsibility for driving mishaps would fall on the ‘authorised self-driving entity’ that had the vehicle authorised for road use. The Commissions also propose that regulatory sanctions would be available to the ‘in-use regulator’.

The proposals build on the reforms introduced by the Automated and Electric Vehicles Act 2018 under which people who suffer injury or damage from a vehicle that was driving itself will not need to prove that anyone was at fault. No timetable has been set for any further legislative measures.

Under the report’s definition, an Authorised Self-Driving Entity (ASDE) could be the manufacturer or developer that puts the vehicle forward for categorisation as self-driving. The ASDE must show that it was closely involved in assessing the safety of the vehicle and that it has sufficient funds to respond to regulatory action and to organise a recall. The ASDE is responsible for the automated driving system design.

There are then two categories of self-driving use. The first is the ‘user-in-charge’ (UIC) situation, where the vehicle is driving itself but there is a person in the driving seat and the vehicle can pass control back to that person. The second is the ‘no user-in-charge’ (NUIC) situation, where the vehicle is entirely self-driving and carries only passengers, with no human driver. The report recommends that every NUIC vehicle should be overseen by a licensed NUIC operator, with responsibilities for dealing with incidents and (in most cases) for insuring and maintaining the vehicle.
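The allocation of responsibility described above can be summarised as a simple decision rule. The sketch below is an illustration only, a simplified reading of the proposals as summarised in this article rather than the text of the report or of any legislation. The names `Mode`, `driving_task_responsibility` and `other_duties` are hypothetical and chosen purely for this example.

```python
from enum import Enum


class Mode(Enum):
    """Hypothetical labels for the three situations discussed in the article."""
    DRIVER_ASSISTANCE = "driver assistance"   # e.g. cruise control
    USER_IN_CHARGE = "user-in-charge"         # self-driving, person in driving seat
    NO_USER_IN_CHARGE = "no user-in-charge"   # self-driving, passengers only


def driving_task_responsibility(mode: Mode, self_driving_engaged: bool = True) -> str:
    """Who answers for mistakes in the driving task itself (simplified)."""
    if mode is Mode.NO_USER_IN_CHARGE:
        # No human driver at all: the driving task rests with the ASDE.
        return "ASDE"
    if mode is Mode.USER_IN_CHARGE and self_driving_engaged:
        # Self-driving feature engaged: the person in the seat is not a driver.
        return "ASDE"
    # Driver aids only, or the self-driving feature is not engaged.
    return "driver"


def other_duties(mode: Mode) -> str:
    """Who holds duties outside the driving task, e.g. insurance (simplified)."""
    if mode is Mode.NO_USER_IN_CHARGE:
        # Incidents and, in most cases, insuring and maintaining the vehicle.
        return "licensed NUIC operator"
    if mode is Mode.USER_IN_CHARGE:
        # Carrying insurance, checking loads, children's seat belts.
        return "user-in-charge"
    return "driver"


if __name__ == "__main__":
    for mode in Mode:
        print(mode.value, "->", driving_task_responsibility(mode), "/", other_duties(mode))
```

Real cases will turn on facts that this sketch leaves out, most obviously whether the self-driving feature was actually engaged at the moment of the incident.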

A disadvantage could be that, if something does go wrong, the ASDE and the NUIC operator could end up blaming each other, with the consequence of greater uncertainty and possibly more litigation. There is also a risk of confusion between a user-in-charge situation on the one hand and a no user-in-charge situation on the other. Drivers and fleets will need to be very clear about whether the driver is in charge, and responsible, or whether the machine is. In UIC situations, there could be scope for argument, and increased litigation, over whether the vehicle or the driver was ‘in control’ at the time of an accident.

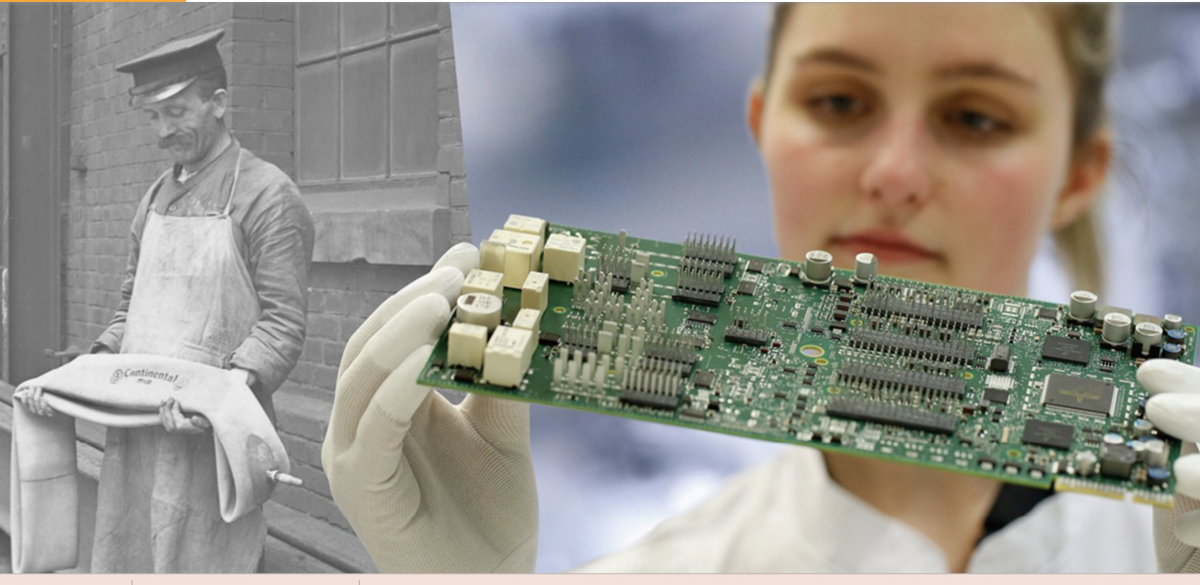

Acquisition and maintenance costs will be higher than for a traditional fleet. Self-driving features will rely on a significant amount of computing power and sensing equipment, on top of the base cost of the vehicle. While prices will fall over time, the upfront and maintenance investment will be a hurdle for fleet managers to consider.

Data

AVs will generate a huge amount of data on location, surroundings, route and systems. The report recommends that this data must be accessible, because it will be needed to understand fault and liability following a collision. Such data may prove a more precise means of determining what happened in a collision than the evidence of drivers or witnesses, which can often be incorrect. With that in mind, fleet staff may need to include data analysts or cybersecurity analysts to ensure data is protected from hackers. Fleet managers face the challenge of ensuring their software systems are both state-of-the-art and constantly updated to mitigate the potential for hacking.

Conclusion

The report is a welcome move to allow the law to catch up with the ever-evolving self-driving vehicle sector. The law, both legislation and case law, will continue to evolve to keep pace with the increasing complexities introduced by new technologies.

About the author: Chris Heitzman is Legal Director at Corclaim