From the stones used by our hunter-gatherer forebears to the ploughs of the agricultural era to the laptop I’m writing this article on, humans have used tools to get a range of jobs done. One consistent feature of those tools is that, while we actively interacted with them, they were largely inanimate objects. That is starting to change as modern electronics give machines the ability to sense and respond to their users. Biometric sensing is the next big frontier in automotive human-machine interfaces (HMI).

To some degree, crude biometrics have been with us for some time. Electronic stability control (ESC) first appeared in the mid-1990s and featured a range of sensors designed to detect the driver’s steering and speed inputs and the actual response of the vehicle. The system then executed corrective actions through a variety of actuators to help make the two match.

Sensing the amplitude and rate of the driver’s steering wheel input is quite straightforward. It’s a system that works well enough when the driver is expected to be fully in command of the vehicle. But what happens when the driver starts to become partially disengaged as we add some levels of automation to the driving task?

We now need much more sophisticated and nuanced sensing systems that can tell us more about the human occupant of the vehicle when they may not be fully involved in driving. True hands-free automation arrived in 2017 with the debut of General Motors’ Super Cruise system. Although it allows the driver to take their hands off the steering wheel during highway driving, Super Cruise is a Level 2 partially automated system, so it still requires the driver to watch the road and be ready to take control immediately.

A hands-on-wheel check like the steering wheel torque sensor Tesla uses for its Autopilot system wouldn’t work if the hands aren’t expected to be on the wheel. GM utilises an infrared emitter and camera system developed by Seeing Machines that illuminates the driver’s face to determine head position and gaze. If the driver looks away from the road for more than a few seconds at highway speeds, the system alerts the driver to take back control of the vehicle. If the driver fails to respond promptly, Super Cruise will bring the vehicle to a safe stop.
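The escalation described above (look away too long, get an alert, then a controlled stop) can be sketched as a small timer-driven monitor. This is only an illustration under stated assumptions: the class name, the returned action strings and both thresholds are hypothetical and are not taken from Super Cruise or any Seeing Machines product.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative thresholds only; real systems tune these to speed and context.
ALERT_AFTER_S = 3.0   # assumed stand-in for "a few seconds" of eyes off the road
STOP_AFTER_S = 8.0    # assumed grace period before a controlled stop begins


@dataclass
class AttentionMonitor:
    """Tracks how long the driver's gaze has been off the road."""
    eyes_off_since: Optional[float] = None

    def update(self, t: float, eyes_on_road: bool) -> str:
        """Return the action for the camera sample taken at time t (seconds)."""
        if eyes_on_road:
            # Gaze is back on the road, so the timer resets.
            self.eyes_off_since = None
            return "ok"
        if self.eyes_off_since is None:
            self.eyes_off_since = t
        away = t - self.eyes_off_since
        if away >= STOP_AFTER_S:
            return "safe_stop"
        if away >= ALERT_AFTER_S:
            return "alert"
        return "ok"
```

Feeding the monitor one sample per camera frame keeps the logic independent of frame rate, since the decision depends only on elapsed time since the gaze first left the road.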

The same type of system can be utilised even in manually driven vehicles to help detect drowsiness or impairment. These are leading causes of crashes and fatalities. Since modern vehicles use a range of by-wire actuation systems, a biometric system that can detect inattention for any reason and automatically bring the vehicle to a safe stop could be a huge safety benefit.


Even these more capable biometric sensors are just the beginning. The infrared sensors being used now are relatively low-resolution devices meant to detect head and eye position. However, hundreds of millions of people around the world already carry a device in their pocket with a much higher resolution version of the same technology that can authenticate a user’s identity.

Apple introduced the iPhone X in 2017 with an infrared sensing system to unlock the device when the registered owner looked at it. Since the version used by Apple is intended for secure authentication, it has significantly higher resolution than the sensors being used for gaze detection. Adoption of higher resolution units for vehicles could allow for keyless authentication and add an extra layer of security to prevent theft. A number of major automotive suppliers have also demonstrated systems that use either infrared or standard visible-light RGB cameras to identify the driver and automatically enable stored preferences, or even download them from the cloud for shared vehicles.

In an era of a global pandemic, new types of biometric sensing can also provide much more information about the wellbeing of vehicle occupants. In 2012, Ford demonstrated a system it dubbed driver workload monitoring. Using a range of sensors in the steering wheel and seat belt, the system was designed to detect stress levels in the driver. A piezoelectric sensor in the seat belt picked up respiration, infrared sensors on the wheel measured both the ambient temperature and the driver’s temperature, and conductive sensors measured heart rate.

If the driver’s stress level increased while data from the vehicle’s sensors indicated increased workload, such as heavy traffic or other demanding conditions, the system could automatically enable a do-not-disturb mode in the infotainment system and reduce audio volume.
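The key idea in that paragraph is that neither signal acts alone: the infotainment is quietened only when driver stress and road workload are both elevated. A minimal sketch of that gating follows. Everything in it is an assumption made for illustration, including the way stress is scored against resting values, the thresholds and the setting names; Ford has not published its logic in this form.

```python
from dataclasses import dataclass


@dataclass
class DriverVitals:
    heart_rate_bpm: float    # e.g. from conductive sensors on the wheel
    breaths_per_min: float   # e.g. from a piezoelectric seat belt sensor


def stress_score(v: DriverVitals, resting_hr: float = 65.0,
                 resting_breaths: float = 14.0) -> float:
    """Hypothetical score: mean of each vital relative to its resting value."""
    hr_ratio = v.heart_rate_bpm / resting_hr
    breath_ratio = v.breaths_per_min / resting_breaths
    return (hr_ratio + breath_ratio) / 2


def infotainment_settings(v: DriverVitals, road_workload: float,
                          stress_limit: float = 1.25,
                          workload_limit: float = 0.6) -> dict:
    """road_workload is an assumed 0..1 estimate from the vehicle's own sensors."""
    # Both conditions must hold before the cabin is quietened.
    busy = stress_score(v) >= stress_limit and road_workload >= workload_limit
    return {"do_not_disturb": busy, "volume_scale": 0.5 if busy else 1.0}
```

Requiring both conditions avoids muting the cabin for a driver who is simply excited on an empty road, or for a calm driver in dense traffic.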

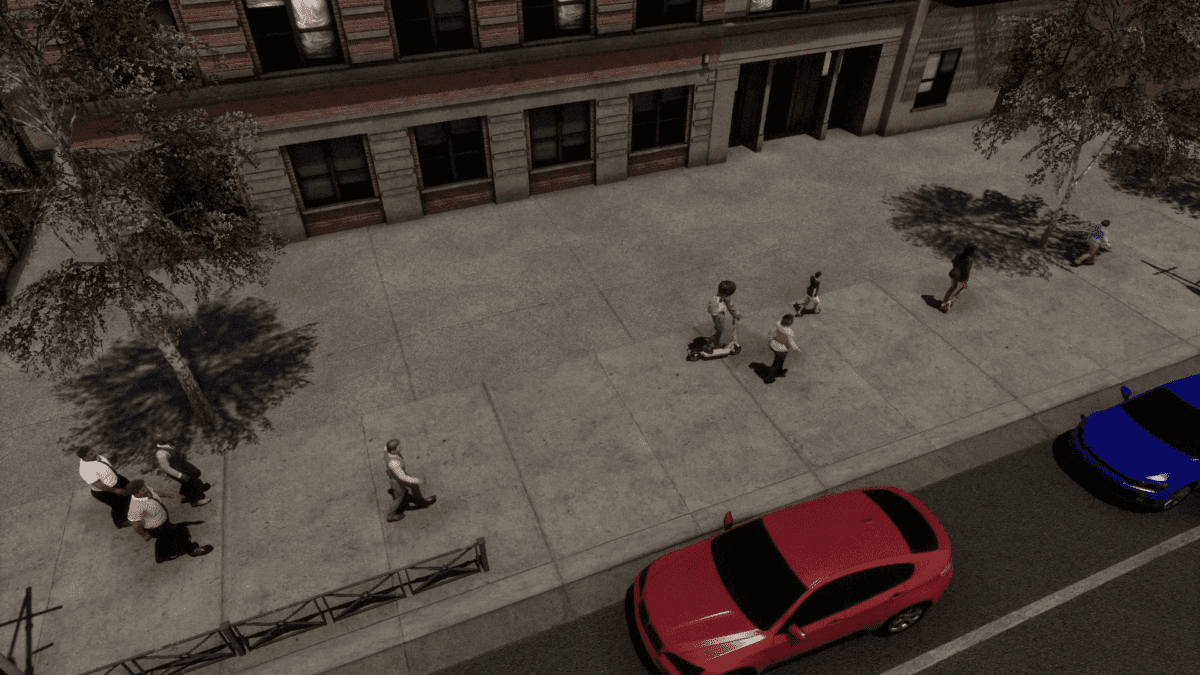

In a future where robotaxis become common, similar sensors could have a variety of applications. Thermal imaging sensors can be used to detect passengers with a fever and automatically trigger a sanitising cycle with a UV-C lamp after the passenger gets out of the vehicle. The vehicle could also notify the passenger that it detected an elevated temperature they may not be aware of, and suggest they get a medical exam.

Passengers in automated vehicles may also face an increased probability of motion sickness. Temperature sensors may detect a change in passenger state associated with motion sickness, allowing the vehicle to automatically adjust its driving behaviour and climate control to mitigate the effects on the passengers. If a passenger does become ill or smokes in one of these shared mobility vehicles, camera sensors will detect this and send the vehicle back to the depot for cleaning. If something is left behind when a passenger gets out, the vehicle can stay in place and automatically send a notification to the passenger to come back for their item.
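The robotaxi scenarios in the two paragraphs above share one pattern: a sensed cabin event maps to one or more vehicle responses. One way to picture that is a simple lookup table, sketched below. The event names and action strings are hypothetical labels for the behaviours described in the text, not part of any real vehicle platform.

```python
from enum import Enum, auto


class CabinEvent(Enum):
    FEVER_DETECTED = auto()
    MOTION_SICKNESS = auto()
    CABIN_SOILED = auto()        # a passenger became ill or smoked
    ITEM_LEFT_BEHIND = auto()


# Hypothetical action names that mirror the responses described in the article.
RESPONSES = {
    CabinEvent.FEVER_DETECTED: ["notify_passenger", "uv_c_sanitise_after_exit"],
    CabinEvent.MOTION_SICKNESS: ["smooth_driving", "adjust_climate"],
    CabinEvent.CABIN_SOILED: ["return_to_depot"],
    CabinEvent.ITEM_LEFT_BEHIND: ["hold_position", "notify_passenger"],
}


def plan_response(events):
    """Merge the actions for all detected events, preserving order without repeats."""
    actions = []
    for event in events:
        for action in RESPONSES[event]:
            if action not in actions:
                actions.append(action)
    return actions
```

Keeping the mapping in data rather than in branching code would let a fleet operator change a policy, such as when a sanitising cycle runs, without touching the sensing logic.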


For the foreseeable future, automated vehicles will still be coexisting with human-driven vehicles and will likely be involved in crashes even if the software doesn’t cause them. Thus shared mobility vehicles will have to offer occupant protection systems such as seat belts and airbags. However, since many of these vehicles are likely to have non-traditional configurations such as carriage-style, rotating or reclining sleeper seats, offering proper protection regardless of the direction of impact will be important. Biometric sensors can detect where passengers are sitting and in what posture to automatically adjust the deployment of passive safety devices for optimal protection.

The future is full of vehicles that sense the users and automatically adapt behaviour based on who those occupants are, where they are and what they are doing rather than the other way around.

Sam Abuelsamid is Principal Research Analyst, Guidehouse Insights. He leads the group’s E-Mobility Research Service, with a focus on transportation electrification, automated driving and mobility services.